VIDEO: 6 Key Roles of AI in Contact Centers

One of the most important debates in cybersecurity these days is about choosing “the best option,” but does it exist? We always say that there’s no definitive answer, as every organization and network has different needs.

EDR (Endpoint Detection and Response) and Virus Protection (or antivirus) are two of the most popular cybersecurity solutions. While both serve the crucial purpose of protecting your devices and data from malicious attacks, they operate on different principles and offer distinct layers of defense. Here are the key differences in how they approach core security aspects:

In summary, antivirus software can be considered a critical component of basic cybersecurity, providing protection against known threats. EDR solutions, on the other hand, offer a more comprehensive and proactive approach by detecting and responding to a wider range of threats, both known and unknown.

While many organizations use both antivirus and EDR as part of a layered cybersecurity strategy, EDR is becoming more prevalent as a way to secure endpoints and networks. Technology Navigation can help you determine the option that best serves the cybersecurity needs of your organization. Our support continues through the end of your solution’s lifecycle. Contact us here.

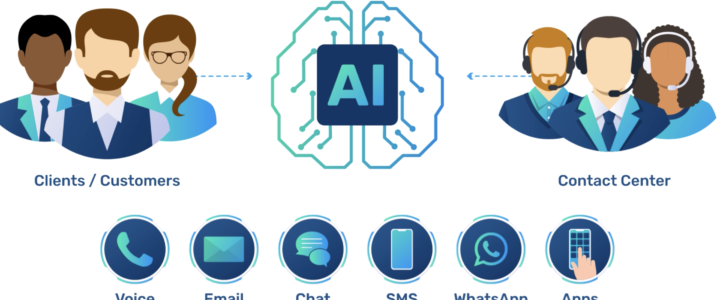

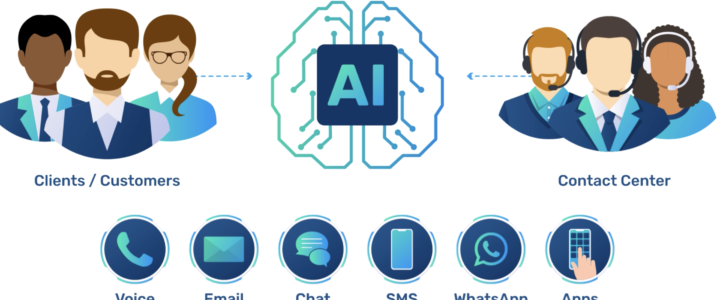

The contact center landscape is undergoing a rapid transformation due to the advent of AI, which is revolutionizing aspects such as interaction handling, workforce optimization, and training. Some clients are looking to embrace this technology while maintaining their core values and identity; others are afraid of falling behind on the latest updates; the deniers think that it’s just a trend that will eventually pass; and others are still reluctant to believe in all the benefits. Amidst all that confusion, here are some considerations and approaches you need to know about AI for CCaaS:

Contact centers can adopt AI technology by thoughtfully integrating it into their existing infrastructure. By leveraging AI-powered tools and solutions, they can enhance efficiency and effectiveness without compromising their core values. It’s crucial to select AI systems that align with the organization’s principles and goals.

AI has the potential to automate certain tasks traditionally performed by contact center agents. Here are some ways in which AI can replace and/or augment agents:

This is one of the most powerful yet overlooked strengths of AI. While AI enhances training in contact centers, it’s essential to strike a balance between technology and human touch. Contact centers should ensure that AI is used as a tool to augment and support human agents, rather than to replace them.

Contact centers can establish ethical frameworks and guidelines for AI usage. By defining clear boundaries and principles, organizations can ensure that AI technologies are used responsibly: respecting customer privacy, protecting data security, and maintaining transparency. Upholding these core values will help contact centers preserve their identity throughout the AI integration process.

By adopting these strategies, contact centers can successfully incorporate AI into their operations while staying true to their core values and identity. Interested in learning more? Contact us to help you determine the best option for your CCaaS.